Scalability and Microservices: A Road to Docker

Hi there! The goal of this article is to help you understand scalability and microservices, and how they lead the road to Docker. Let's be clear, though: scalability and microservices aren't the only reasons to use Docker; it has many other advantages. Also, most of the time when I talk about microservices, people start shouting "Docker". So let's clear this up as well: "NO! Docker is not microservices, and Docker is not the only way to implement microservices." Microservices and Docker simply make a good duo when it comes to implementing and scaling services, which is why the combination has been adopted by big organizations such as Netflix, Spotify, and IBM.

So let's start understanding scalability with a scenario: you built an application and hosted it on a server in the cloud or on-premises. You were getting 10k requests per second, and the server running your application was easily able to serve your users.

This number, 10k, varies depending on the hardware specifications and on how your code handles user requests. For example, Node-based servers use asynchronous, non-blocking I/O, whereas traditional Java or PHP servers typically use synchronous, blocking I/O; consequently, Node-based servers can often handle many more concurrent requests. Coming back to our scenario: your server was performing fine, then you launched a new feature, and suddenly some of your users started getting timeout errors.
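To make the blocking vs. non-blocking difference concrete, here is a minimal Python sketch that simulates requests which spend most of their time waiting on I/O. The delay and request count are hypothetical, and the blocking side is deliberately a single thread serving requests one by one; real Java or PHP servers use thread or process pools, so the gap is smaller in practice, but the idea is the same: while one request waits, a non-blocking server keeps serving others.

```python
import asyncio
import time

IO_WAIT = 0.05   # hypothetical: seconds each request spends waiting on I/O (e.g. a DB query)
N_REQUESTS = 20

def handle_blocking(request_id: int) -> int:
    time.sleep(IO_WAIT)             # blocking: the thread can do nothing else meanwhile
    return request_id

async def handle_non_blocking(request_id: int) -> int:
    await asyncio.sleep(IO_WAIT)    # non-blocking: control returns to the event loop
    return request_id

def serve_blocking(n: int) -> float:
    """Single thread, blocking I/O: requests are served strictly one after another."""
    start = time.perf_counter()
    for i in range(n):
        handle_blocking(i)
    return time.perf_counter() - start

async def serve_non_blocking(n: int) -> float:
    """Single thread, event loop: all n requests wait on I/O at the same time."""
    start = time.perf_counter()
    await asyncio.gather(*(handle_non_blocking(i) for i in range(n)))
    return time.perf_counter() - start

if __name__ == "__main__":
    print(f"blocking:     {serve_blocking(N_REQUESTS):.2f}s")                  # about N_REQUESTS * IO_WAIT
    print(f"non-blocking: {asyncio.run(serve_non_blocking(N_REQUESTS)):.2f}s")  # about IO_WAIT
```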

So what happened here? You look at the logs and see more than 100k requests arriving at once, and your application wasn't able to process them all. Users couldn't get a response back in time, so their requests timed out (timeouts are configurable and usually range from tens of seconds to a couple of minutes, depending on the client, proxy, and server settings). You realized that your server couldn't handle this huge number of simultaneous requests.

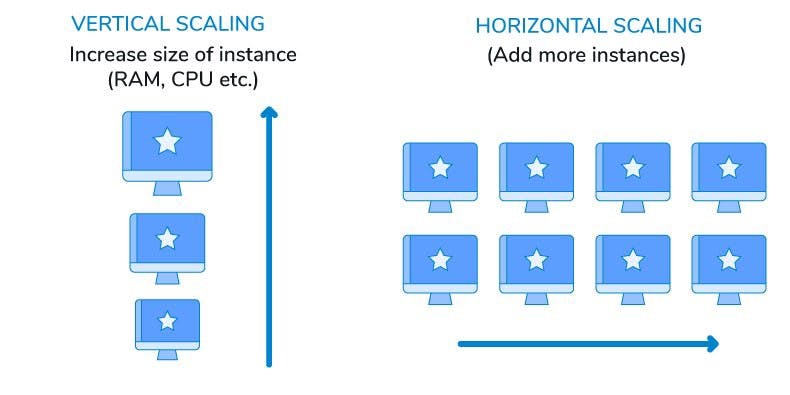

So you googled for a solution, and the first result was: do vertical scaling, i.e., increase your RAM, get a better CPU, or both. But there's a limit to how far a single machine can grow, while your user base keeps growing day by day. Therefore, it isn't the best solution.

So you googled for something better, and the result was: do horizontal scaling, i.e., buy multiple servers, host them in different regions, and direct users from each region to the server hosted in that region, e.g., one server in India, one in the US, one in the UK, and so on.

This was a good solution, but you soon realized that a single server per region was not enough, as there was another surge in the number of users. You were now getting 100k requests from each region, and a single server couldn't handle 100k requests at a time.

So this time you didn't google; instead, you reused the concept of horizontal scaling. You deployed multiple servers in every region behind a reverse proxy. A reverse proxy is just another server that sits in front of your application servers and, in this setup, load balances the requests across them.

If you're wondering what load balancing means, it simply means distributing incoming requests across different application servers so that each one gets a similar amount of load, i.e., roughly the same number of incoming requests. For example, your 100k incoming requests per second are now handled not by 1 application server but by, say, 3. Each application server now processes only about 33k requests at a time, which it can do easily.

The reverse proxy is in charge of load balancing. All you need to do is specify the load balancing algorithm it should use, along with the IP:PORT of each application server. Round robin, which simply hands requests to the servers in turn, is the default algorithm in many popular load balancers, such as NGINX and HAProxy.

So now you've deployed 3 to 4 servers in every region, which, at roughly 33k requests per second each, lets a region absorb about 100k to 130k requests per second. With 3 such regions, your system serves around 300k to 400k requests per second overall, and handling more is just a matter of adding servers behind the proxies. "Congrats! That's a huge milestone, achieved very simply."
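Here is a minimal Python sketch of the two ideas combined: route a user to their region first, then let that region's reverse proxy spread requests across its servers with round robin. The region names and IP:PORT values are hypothetical; a real setup would put these addresses in the proxy's configuration rather than in application code.

```python
from collections import Counter
from itertools import cycle

# Hypothetical upstream pools: each region's reverse proxy knows the IP:PORT of its app servers.
REGION_POOLS = {
    "india": ["10.0.1.1:8080", "10.0.1.2:8080", "10.0.1.3:8080"],
    "us": ["10.0.2.1:8080", "10.0.2.2:8080", "10.0.2.3:8080"],
}

class RoundRobinBalancer:
    """Hands out upstream servers in a fixed rotation, one per incoming request."""

    def __init__(self, upstreams: list[str]) -> None:
        if not upstreams:
            raise ValueError("need at least one upstream server")
        self._rotation = cycle(upstreams)

    def next_server(self) -> str:
        return next(self._rotation)

# One balancer (i.e. one reverse proxy) per region.
balancers = {region: RoundRobinBalancer(pool) for region, pool in REGION_POOLS.items()}

def route(region: str) -> str:
    # Horizontal scaling: pick the user's region first, then round-robin within it.
    return balancers[region].next_server()

if __name__ == "__main__":
    hits = Counter(route("india") for _ in range(99_000))
    print(hits)  # each of the 3 Indian servers receives exactly 33,000 requests
```

Round robin is a good default when servers are identical and requests cost roughly the same; when they don't, algorithms that look at current load (such as least connections) tend to balance better.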

This was an example of scaling up your system as the load increases. But scalability is not restricted to scaling up; it's also about being able to scale down during off-peak hours, which can save you a lot of money. Scalability is the flexibility to increase or decrease resource usage according to demand.
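The scale-up/scale-down decision boils down to simple arithmetic. This Python sketch computes how many servers the current load needs, clamped between a floor and a ceiling; it is similar in spirit to what autoscalers do, but the per-server capacity and the limits are hypothetical numbers, not any provider's defaults.

```python
import math

def desired_servers(requests_per_sec: float, capacity_per_server: float,
                    min_servers: int = 2, max_servers: int = 20) -> int:
    """How many servers the current load needs, kept within [min_servers, max_servers]."""
    needed = math.ceil(requests_per_sec / capacity_per_server)
    # min_servers keeps some redundancy off-peak; max_servers caps the bill at peak.
    return max(min_servers, min(max_servers, needed))

if __name__ == "__main__":
    capacity = 33_000  # hypothetical: what one server handles comfortably
    print(desired_servers(100_000, capacity))  # peak: 4
    print(desired_servers(20_000, capacity))   # off-peak: 2 (the floor)
```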

Once all this was done and your business kept growing, your application code became huge and complex, and you started facing problems maintaining your code and data on multiple servers. Adding new features and integrating third-party services became more and more tedious. Every time you added a new feature, you had to take the entire application down from your servers, rebuild it with the new feature, and deploy it back.

Redeploying this huge application sometimes took more than 20 minutes, which meant around 20 minutes of downtime. That's a lot, and as a consequence, you were losing customers. (There are some cheap tricks to mitigate this downtime as well, but those are just workarounds, not solutions.)

So you googled again and found that moving to microservices could solve this. So what are microservices, and how do they solve the problem?

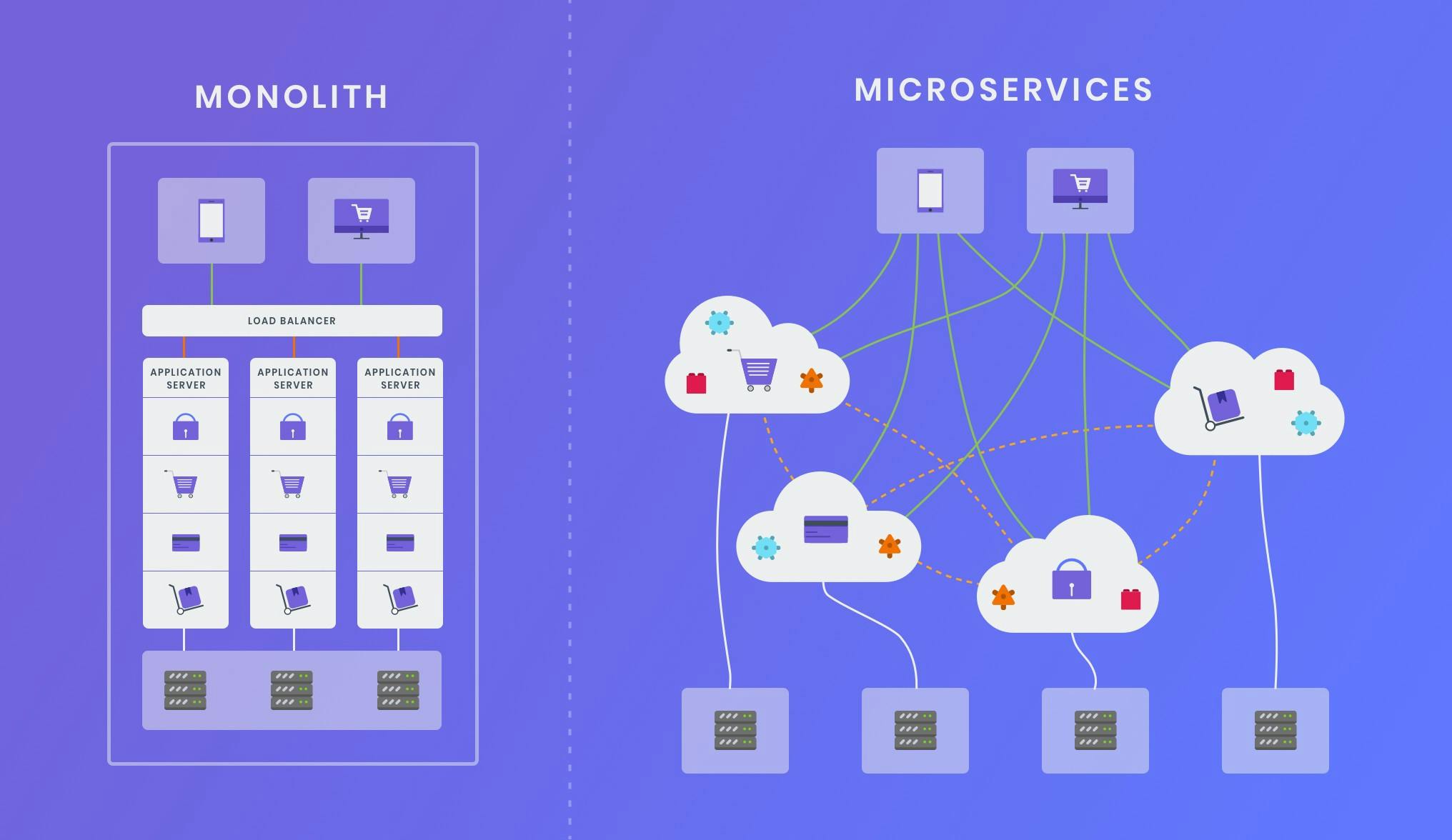

Microservices is just an architectural pattern that breaks a huge service into small (micro) services that can be tested, deployed, and scaled independently.

So you slowly and carefully started breaking down your huge, highly coupled application (called a monolith in technical terms) into small, independent services that talk to each other and together do what the monolith was doing.

Let's take a quick example of how you would go about doing this.

Let's say you have a social networking site. Whenever someone wants to follow a user, they click the follow button on that user's profile, a request comes to your server, and your monolith starts processing it. Basically, the request triggers a function you've written to handle 'follow' requests.

That single function will:

First, verify the SSL certificates (I know you'll say, "Wait, this is done by the reverse proxy we set up" or "My hosting platform takes care of that for me", but for this example, let's assume we don't have these yet and we're in the stone age, implementing everything from scratch).

Next, it will authenticate the user who's sending the request.

Then, after performing some basic validations, it creates a record in a table that stores the 'who's following whom' relation.

Then it creates and sends a notification to the user who's being followed.

If all the above tasks succeed, it sends back a 200 response stating "you are now following the user"; otherwise, it sends a 4xx or 5xx status corresponding to the error that occurred.
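To make the coupling concrete, here is a minimal Python sketch of such a monolithic handler. Everything in it is hypothetical and chosen only for illustration: the `handle_follow` name, the in-memory token table and follow set standing in for a database, and an `is_https` flag standing in for the SSL step.

```python
from dataclasses import dataclass

# Hypothetical in-memory stand-ins for the monolith's database and user sessions.
VALID_TOKENS = {"token-alice": "alice"}      # session token -> username
follows: set[tuple[str, str]] = set()        # (follower, followee) records
notifications: list[str] = []

@dataclass
class Request:
    is_https: bool      # simplification of the SSL/TLS step
    token: str
    target_user: str

def handle_follow(req: Request) -> tuple[int, str]:
    """One function doing every step of the 'follow' flow (the monolith way)."""
    # Step 1: the SSL check, reduced here to "was this an HTTPS request?".
    if not req.is_https:
        return 400, "HTTPS required"
    # Step 2: authenticate the caller.
    user = VALID_TOKENS.get(req.token)
    if user is None:
        return 401, "invalid token"
    # Step 3: basic validation, then record who follows whom.
    if req.target_user == user:
        return 400, "you cannot follow yourself"
    follows.add((user, req.target_user))
    # Step 4: notify the followed user.
    notifications.append(f"{user} started following you")
    return 200, "you are now following the user"
```

Every other request handler in such a codebase would repeat steps 1 and 2 before doing its own work.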

So now you're wondering what's wrong here. The steps are fine, but having a single function perform all of them is not good design practice, as it introduces coupling that makes maintaining large-scale projects complex and cumbersome. You will also see duplicated code: most of your request-handling functions will start with the same first 2 steps, i.e., verifying SSL certificates and authenticating the user, which bloats your codebase.

Hopefully, you're now convinced to take the road of breaking this monolith down into small services (microservices) that each handle one step of your function. So you start by creating an Authentication Microservice to authenticate your users. Next, you create a UserFollowing Microservice that records who's following whom. Then you create a Notification Microservice that sends a notification to the user being followed.
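Here is the same flow split along those service boundaries, as a minimal Python sketch. To keep it runnable, each "microservice" is a plain class called in-process; in a real deployment each would be its own process with its own data, reached over the network. All class and method names are hypothetical.

```python
from typing import Optional

class AuthenticationService:
    """Only job: map a session token to a user."""
    def __init__(self, tokens: dict[str, str]) -> None:
        self._tokens = tokens  # hypothetical session store: token -> username

    def authenticate(self, token: str) -> Optional[str]:
        return self._tokens.get(token)

class UserFollowingService:
    """Only job: own the 'who follows whom' records."""
    def __init__(self) -> None:
        self.follows: set[tuple[str, str]] = set()

    def follow(self, follower: str, followee: str) -> None:
        if follower == followee:
            raise ValueError("you cannot follow yourself")
        self.follows.add((follower, followee))

class NotificationService:
    """Only job: deliver notifications."""
    def __init__(self) -> None:
        self.sent: list[tuple[str, str]] = []  # (recipient, message)

    def notify(self, recipient: str, message: str) -> None:
        self.sent.append((recipient, message))

def follow_flow(auth: AuthenticationService, following: UserFollowingService,
                notifier: NotificationService, token: str, target: str) -> tuple[int, str]:
    """Glue that calls each service in turn, the way a gateway or caller would."""
    user = auth.authenticate(token)
    if user is None:
        return 401, "invalid token"
    try:
        following.follow(user, target)
    except ValueError as err:
        return 400, str(err)
    notifier.notify(target, f"{user} started following you")
    return 200, "you are now following the user"

if __name__ == "__main__":
    auth = AuthenticationService({"token-alice": "alice"})
    print(follow_flow(auth, UserFollowingService(), NotificationService(), "token-alice", "bob"))
```

Each class can now change, be tested, or be replaced without touching the others; only the small interface between them has to stay stable.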

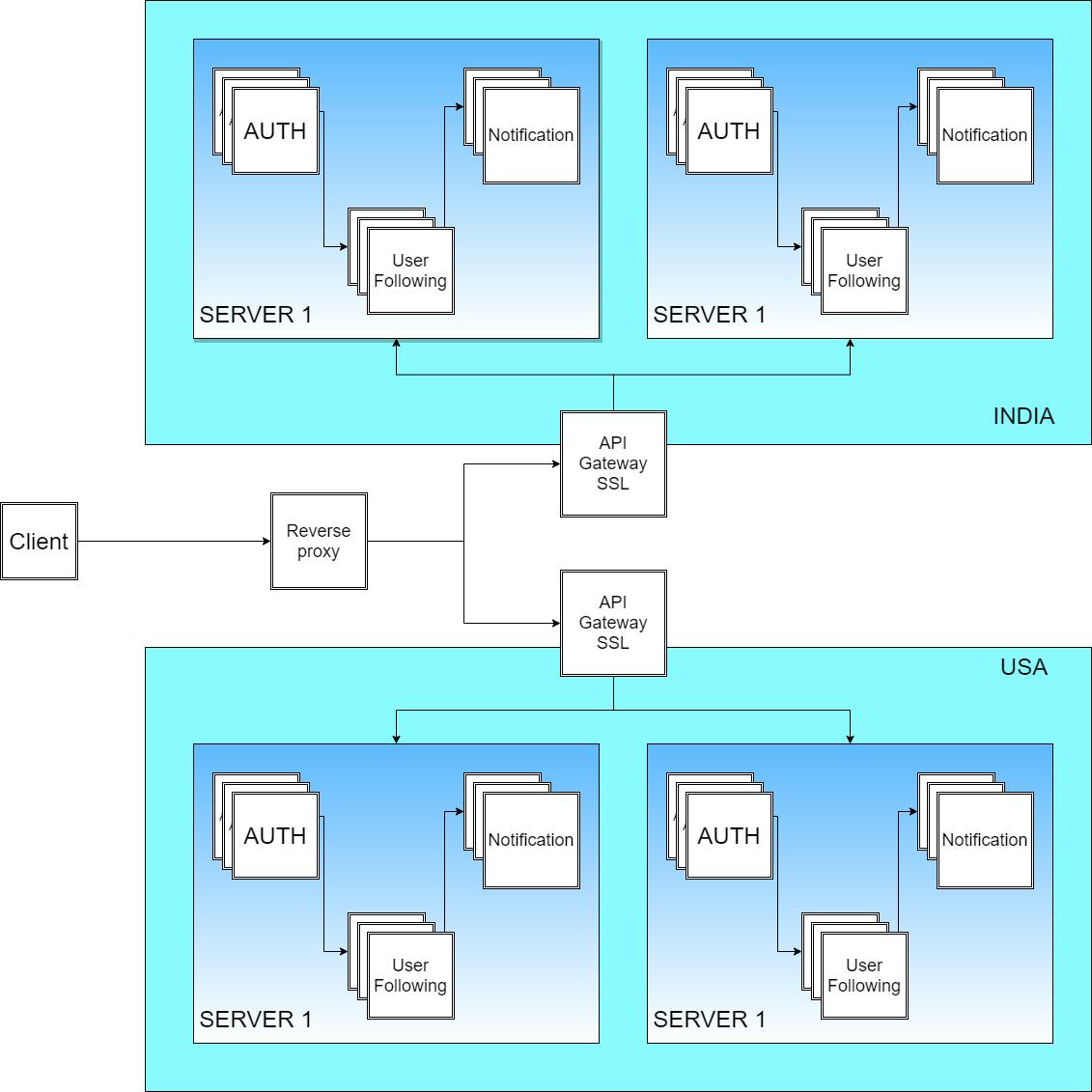

Now you would ask, "Hey, but what about SSL certificate verification?" Well, we don't generally create a dedicated microservice for that. Instead, we use an API gateway. Think of an API gateway as an advanced version of the reverse proxy we talked about earlier. We could have set up a reverse proxy as well, but that would involve writing a lot of configuration. Hence, at large scale, we often use third-party platforms like MuleSoft or Apigee, which provide API gateways along with API management, analytics, security, and much more, so that organizations can delegate these non-functional requirements to a third-party service and focus on business logic.

So the API gateway will now handle SSL certificate verification. Additionally, with such a gateway, we don't even need to implement authentication as a microservice, since the gateway can typically handle it for us. For the sake of this example, though, let's keep it as a microservice; there's nothing wrong with doing so, it's just not the smartest decision.

So now, the request flow will be:

As soon as the user clicks 'follow', the request goes through the proxy to the API gateway.

The API gateway will do the SSL verification.

Then the Authentication Microservice will be called to authenticate the user.

Then the request will go to the UserFollowing Microservice which will create the record.

Then the Notification Microservice will be called and it will send the notification.

So now we have decoupled the entire process flow of the monolith into separate microservices that work together to do the same thing. Interesting, isn't it? Next, we have to figure out how to deploy these microservices. You might say, "Oh, it's pretty easy: run these microservices on separate ports of your operating system (the Authentication Microservice on 3000, the Notification Microservice on 4000, the API gateway on some other port) and have them communicate over the network using REST APIs." And believe me, this works like a charm.
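As a minimal sketch of that idea, here is an Authentication Microservice listening on port 3000 and a client (for example, the API gateway) calling it over HTTP with JSON, using only Python's standard library. The `/authenticate` endpoint, the request and response shapes, and the token table are hypothetical; a real service would use a proper web framework and a real credential store.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Optional
from urllib.error import HTTPError
from urllib.request import Request, urlopen

TOKENS = {"token-alice": "alice"}  # hypothetical token store owned by this service

class AuthHandler(BaseHTTPRequestHandler):
    """Authentication Microservice: POST /authenticate with {"token": "..."}."""

    def do_POST(self) -> None:
        if self.path != "/authenticate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        user = TOKENS.get(body.get("token"))
        status, payload = (200, {"user": user}) if user else (401, {"error": "invalid token"})
        data = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args) -> None:  # keep the demo output quiet
        pass

def start_auth_service(port: int = 3000) -> ThreadingHTTPServer:
    """Run the service in a background thread on the given port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), AuthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server

def authenticate_over_rest(base_url: str, token: str) -> Optional[str]:
    """What a caller does: send the token to the auth service and read back the user."""
    req = Request(f"{base_url}/authenticate",
                  data=json.dumps({"token": token}).encode(),
                  headers={"Content-Type": "application/json"},
                  method="POST")
    try:
        with urlopen(req, timeout=5) as resp:
            return json.loads(resp.read())["user"]
    except HTTPError as err:
        if err.code == 401:
            return None
        raise

if __name__ == "__main__":
    server = start_auth_service(3000)
    print(authenticate_over_rest("http://127.0.0.1:3000", "token-alice"))  # alice
    print(authenticate_over_rest("http://127.0.0.1:3000", "nope"))         # None
    server.shutdown()
    server.server_close()
```

The Notification Microservice on port 4000 would follow the same pattern with its own endpoint; the services only share the HTTP contract, not code or memory.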

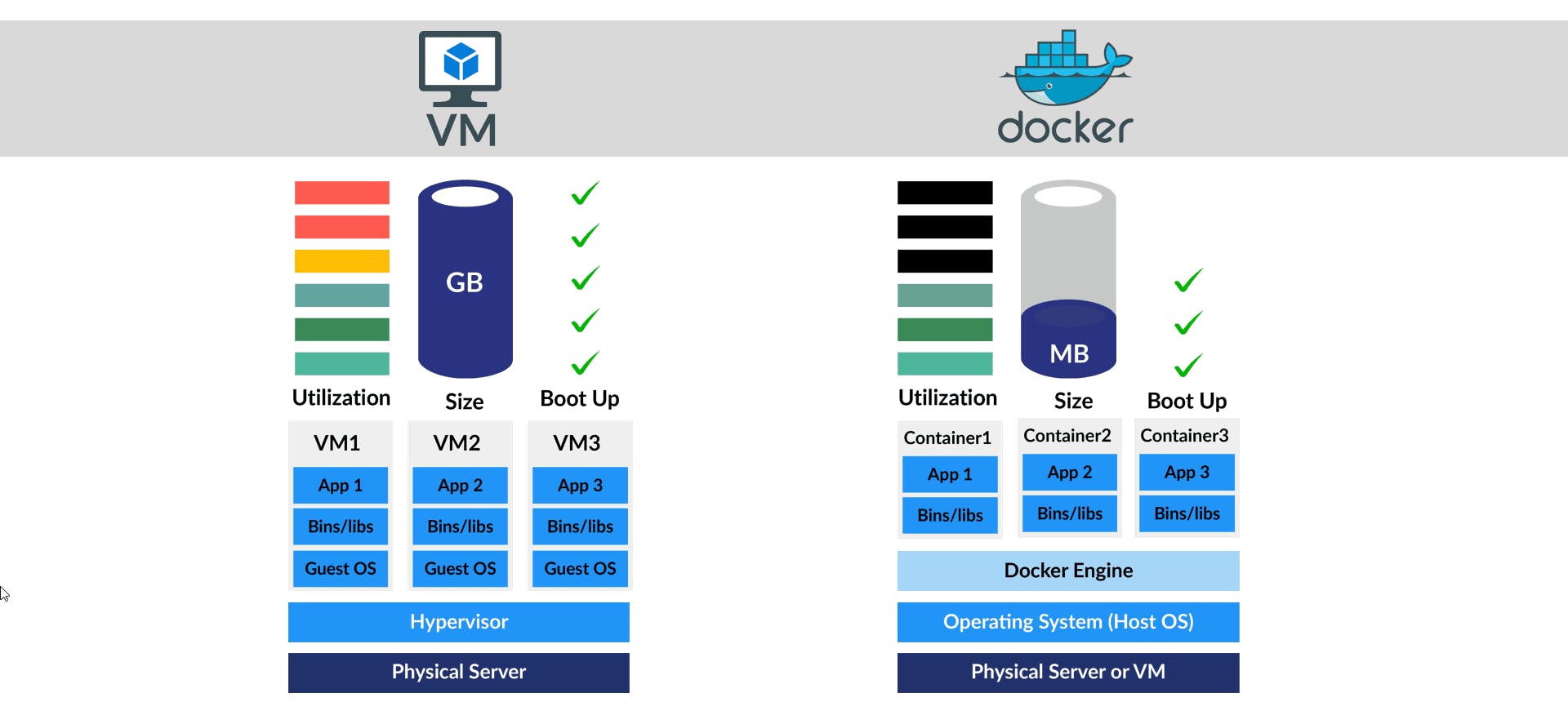

Now, let's say you built a new feature with the latest version of the underlying programming language/SDKs and updated your operating system's environment to that version. Doing so, you realized that another microservice, built with an older version, stopped working. This happened because the newer version removed some of the previous version's APIs that this microservice was using. Or you might be using third-party tools that require different versions of the same underlying dependency, e.g., Software A needs Java 8 and Software B requires Java 11, and the two can't both be the system-wide default Java on the same OS. So what do we do now? Just what we always do: Google it!

Now we see that we can use virtual machines to solve this problem. Yes, the ones we used for installing Kali Linux on Windows to do some hacking stuff, or just to play around with different operating systems. Exactly that. However, VMs are useful for much more than playing around: we can use them to create isolated environments on top of the host OS or directly on top of the hardware. "So how does that solve the problem with different versions?" As you might have guessed, we can create a separate VM for each microservice, each with its own operating system and the dependencies that microservice needs, and run all of these VMs on a single server. That way, every microservice works fine and the whole system is up and running.

Now, you might say, "Hold on, the title mentions Docker and he hasn't even mentioned Docker yet." Well, I guess it's time! Let's continue the pattern of a problem followed by its solution. You soon realized that these virtual machines were very slow to spin up every time you wanted to redeploy a microservice after a change. It's similar to turning on your PC/laptop, which takes a lot of time (you'll relate to this pain if you've ever accidentally clicked 'restart' instead of 'shut down' and then had to wait an eternity just to shut it down again). Apart from taking too long to boot, VMs also use a whole lot of memory, because each one runs a full operating system that spins up many OS-specific processes our app doesn't need at all. Our app just needs an OS with the required dependencies and nothing else. And as you can see in the diagram below, that's exactly what Docker provides.

It just runs a Docker daemon (a background process) that runs isolated environments, called containers, on top of the host OS. Instead of virtualizing hardware like a VM does, Docker uses OS-level virtualization: all containers share the host's OS kernel and are isolated from each other by kernel features (namespaces and cgroups on Linux). This is much more lightweight than having a separate OS plus libraries for every service. One thing to note: because containers share the host's kernel, if you are using a Linux distribution like Ubuntu, you can't natively run a Windows-based environment (container) on top of it, but you can run environments (containers) based on other Linux distributions like Debian, Fedora, etc. So now we save on memory and processing power. We can also spin up these microservices, which used to take a long time in the era of virtual machines, in significantly less time: a simple Node-based application usually starts well within 20 seconds, which is blazingly fast by comparison.

So how do we go about deploying the system? We take each microservice and write a Dockerfile for it, which describes how its Docker image should be built. Then we build these images and run them as containers using the Docker daemon.
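To show what "a Dockerfile per microservice" looks like, and how it solves the version conflict from earlier, this Python sketch renders a minimal Dockerfile for each Node-based service, with each one pinning its own runtime version through its base image. The service names, ports, `server.js` entry point, and `services/<name>/` layout are hypothetical; the Dockerfile instructions (`FROM`, `WORKDIR`, `COPY`, `RUN`, `EXPOSE`, `CMD`) are standard, but in practice you would write these files by hand rather than generate them.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ServiceSpec:
    name: str
    base_image: str             # pins the runtime version this service needs
    port: int
    start_cmd: tuple[str, ...]

# Hypothetical services: each gets its own Node version, so they no longer conflict.
SERVICES = [
    ServiceSpec("authentication", "node:18-alpine", 3000, ("node", "server.js")),
    ServiceSpec("notification", "node:20-alpine", 4000, ("node", "server.js")),
]

def render_dockerfile(spec: ServiceSpec) -> str:
    """Return the text of a minimal Dockerfile for one Node-based microservice."""
    cmd = ", ".join(f'"{part}"' for part in spec.start_cmd)  # exec-form JSON array
    return "\n".join([
        f"FROM {spec.base_image}",
        "WORKDIR /app",
        "COPY package*.json ./",        # copy manifests first so dependency layers cache well
        "RUN npm ci --omit=dev",
        "COPY . .",
        f"EXPOSE {spec.port}",
        f"CMD [{cmd}]",
    ]) + "\n"

if __name__ == "__main__":
    for spec in SERVICES:
        print(f"# services/{spec.name}/Dockerfile")
        print(render_dockerfile(spec))
```

With such a file in `services/authentication/`, `docker build -t authentication services/authentication` builds the image and `docker run -d -p 3000:3000 authentication` starts it as a container.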

So that's it: we have our microservices up and running. Clients can now start sending requests, and everything works the same way it did when deployed on VMs. "So what about communication between these microservices?" Well, that stays essentially the same as before, with just a little bit of configuration in Docker. "Finally, we've saved a huge amount of development time and resource usage by using Docker!"
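One common shape for that "little bit of configuration" is a minimal sketch like the one below: each service reads its peers' addresses from environment variables instead of hard-coding `localhost`. On a user-defined Docker network, containers can reach each other by container name, so the defaults point at names like `authentication`; the variable names and defaults here are hypothetical.

```python
import os
from typing import Mapping

# Hypothetical defaults: on a user-defined Docker network, containers resolve each other
# by container name, so "localhost:3000" becomes "authentication:3000".
DEFAULT_SERVICE_URLS = {
    "AUTH_SERVICE_URL": "http://authentication:3000",
    "NOTIFICATION_SERVICE_URL": "http://notification:4000",
}

def service_url(name: str, env: Mapping[str, str] = os.environ) -> str:
    """Look up a peer service's base URL, letting the deployment override the default."""
    return env.get(name, DEFAULT_SERVICE_URLS[name])

if __name__ == "__main__":
    print(service_url("AUTH_SERVICE_URL"))
```

For example, after `docker network create social-net`, starting the auth container with `docker run -d --name authentication --network social-net authentication` makes it reachable from other containers on `social-net` as `http://authentication:3000`; outside Docker you would set `AUTH_SERVICE_URL=http://localhost:3000` instead.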

So what's next? Are there any other problems with this Docker-and-microservices approach? Yes, there are. By introducing a lot of microservices, we introduce new complexity in the communication between them. We have to make these microservices completely stateless, i.e., we can't store state inside them (this is also a pro, because it lets us strictly follow REST, which requires communication to be stateless). Then there's orchestration of these containers, which involves scaling the number of Docker containers up and down, load balancing between them, restarting a container if it goes down, etc. This is where tools like Kubernetes and Docker Swarm come in, and if you'd like to go one step further and combine orchestration with CI/CD, you can use OpenShift.

The last con of microservices is the need for asynchronous communication between them. Your microservice that serves user requests needs to be highly available, so it can't hold on to a request and wait for some slow downstream task to complete. We have to implement message queues for this asynchronous communication, and there are tools like RabbitMQ for exactly that.
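The core idea behind orchestrators like Kubernetes is a control loop that keeps comparing the desired state ("3 notification containers") with the actual state and fixes any difference. This Python sketch is a toy model of that idea only; the `Cluster` class is a hypothetical stand-in for a container runtime, and real orchestrators are far more involved.

```python
from dataclasses import dataclass, field
from itertools import count

@dataclass
class Cluster:
    """Toy stand-in for a container runtime: tracks which containers are running."""
    running: dict[str, set[str]] = field(default_factory=dict)  # service -> container ids
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def start(self, service: str) -> str:
        cid = f"{service}-{next(self._ids)}"
        self.running.setdefault(service, set()).add(cid)
        return cid

    def stop(self, service: str, cid: str) -> None:
        self.running[service].discard(cid)

def reconcile(cluster: Cluster, desired: dict[str, int]) -> None:
    """One pass of a control loop: make actual replica counts match the desired ones."""
    for service, want in desired.items():
        have = sorted(cluster.running.get(service, set()))
        for _ in range(want - len(have)):   # too few (scaled up, or one crashed): start more
            cluster.start(service)
        for cid in have[want:]:             # too many (scaled down): stop the extras
            cluster.stop(service, cid)

if __name__ == "__main__":
    cluster = Cluster()
    reconcile(cluster, {"notification": 3})
    crashed = next(iter(cluster.running["notification"]))
    cluster.stop("notification", crashed)        # simulate a container going down
    reconcile(cluster, {"notification": 3})      # the loop notices and replaces it
    print(len(cluster.running["notification"]))  # 3
```

An orchestrator runs passes like this continuously, so scaling up, scaling down, and recovering from crashes are all the same operation: change (or restore) the desired state and let the loop converge.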

In our social networking example, the Notification service is the best candidate for a message queue, because when someone follows a user, we can't hold on to the request until the notification has been sent. Instead, we put a sendNotification() message on the message queue and, without waiting for it to complete, send a confirmation to the user. Then a question arises: "How can we be sure the notification will be sent to the user?" Well, that's handled by the message queue implementation. Message brokers generally come with persistence features (in RabbitMQ, for example, durable queues, persistent messages, and consumer acknowledgements), so messages you put on the queue aren't lost if the broker restarts or a consumer fails before finishing, and the notification will eventually be sent.
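Here is a minimal Python sketch of that pattern, using the standard library's in-process `queue.Queue` as a stand-in for a broker like RabbitMQ. Unlike a real broker it has no persistence (messages die with the process), and `task_done()` only roughly mirrors a broker acknowledgement; the function names are hypothetical. The point it shows is that the follow handler enqueues the notification and responds immediately, while a separate worker does the slow part.

```python
import queue
import threading
import time

# In-process stand-in for a message broker: no persistence, unlike RabbitMQ.
notification_queue: "queue.Queue[tuple[str, str]]" = queue.Queue()
sent: list[tuple[str, str]] = []

def send_notification(recipient: str, message: str) -> None:
    time.sleep(0.2)  # pretend delivery is slow (push service, email, ...)
    sent.append((recipient, message))

def notification_worker() -> None:
    """Consumer: the Notification service pulls messages off the queue one by one."""
    while True:
        recipient, message = notification_queue.get()
        try:
            send_notification(recipient, message)
        finally:
            notification_queue.task_done()  # roughly what an 'ack' means to a broker

def handle_follow(follower: str, followee: str) -> tuple[int, str]:
    """Producer: enqueue the notification and respond without waiting for it."""
    notification_queue.put((followee, f"{follower} started following you"))
    return 200, "you are now following the user"

threading.Thread(target=notification_worker, daemon=True).start()

if __name__ == "__main__":
    start = time.perf_counter()
    print(handle_follow("alice", "bob"))  # returns almost instantly
    print(f"responded in {time.perf_counter() - start:.3f}s")
    notification_queue.join()  # demo only: wait until the worker has processed everything
    print(sent)  # [('bob', 'alice started following you')]
```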

So these are the different stages we go through as our system matures. However, moving to microservices is not always the best option; choose it only if you have a really good reason, or else you'll be banging your head against your computer over all this complexity and won't be able to focus on the actual business logic. I hope you learned a bit about how scalability and microservices have paved the way for Docker. Stay tuned for more!